Microsoft Fabric: Processing real-time data with Eventstreams

After giving an overview of Real-Time Intelligence in Microsoft Fabric in the previous article, today we’ll dive a bit deeper and take a closer look at Eventstreams.

Events and streams in general

Let’s start by taking a step back and reflecting on what an “event” or “event stream” is outside of Fabric.

For example, let’s say we are storing perishable food in a warehouse. To make sure it’s always cool enough, we want to monitor the temperature. So, we’ve installed a sensor that transmits the current temperature once a second.

Each such transmission is an event. Over time, this results in a sequence of events that, at least in theory, never ends: a stream of events.

At an abstract level, an event is a data package that is emitted at a specific point in time and typically describes a change in state, e.g. a shift in temperature, a change in a stock price, or an updated vehicle location.
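To make this concrete, here is a minimal Python sketch of the warehouse example. The names `TemperatureEvent`, `sensor_id`, and `read_sensor` are illustrative assumptions and have nothing to do with Fabric itself; the generator merely simulates a sensor that emits one reading per second.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from itertools import islice
from typing import Iterator


@dataclass(frozen=True)
class TemperatureEvent:
    """One event: a data package emitted at a specific point in time."""
    sensor_id: str        # hypothetical identifier of the warehouse sensor
    timestamp: datetime   # when the reading was taken
    temperature_c: float  # the state at that moment


def read_sensor(sensor_id: str, start: datetime, seed: int = 0) -> Iterator[TemperatureEvent]:
    """Simulate an unbounded stream: one reading per second, without end."""
    rng = random.Random(seed)
    tick = 0
    while True:
        yield TemperatureEvent(
            sensor_id=sensor_id,
            timestamp=start + timedelta(seconds=tick),
            temperature_c=round(4.0 + rng.uniform(-0.5, 0.5), 2),
        )
        tick += 1


# A stream never ends, so a consumer takes only as much as it needs.
start = datetime(2025, 1, 1, tzinfo=timezone.utc)
first_three = list(islice(read_sensor("cold-room-1", start), 3))
```

The point of the generator is that the stream has no natural end: the consumer decides how much of it to read, here by slicing off the first three events.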

Eventstreams in Fabric

Let’s shift our focus to Microsoft Fabric. Here, an Eventstream represents a stream of events originating from (at least) one source, which is optionally transformed and finally routed to (at least) one destination.

What’s nice is that Eventstreams work without any coding. You can create and configure them entirely in the browser-based user interface.

Here is an example of what an Eventstream might look like:

Each Eventstream is built from three types of elements, which we’ll examine more closely below.

- ① Sources

- ② Transformations

- ③ Destinations

① Sources

To get started, you need a data source that delivers events.

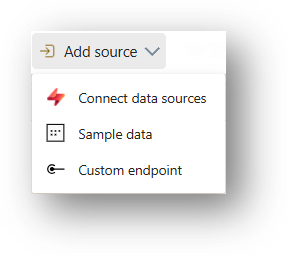

In terms of technologies, a wide range of options is supported. In addition to Microsoft services (e.g. Azure IoT Hub, Azure Event Hubs, OneLake events), these also include Apache Kafka streams, Amazon Kinesis Data Streams, Google Cloud Pub/Sub, and database change data capture (CDC) feeds.

If none of these are suitable, you can use a custom endpoint, which supports Kafka, AMQP, and Event Hub. You can find an overview of all supported sources here.
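As a rough sketch of the custom endpoint route: since the endpoint speaks the Event Hub protocol, a producer written against the `azure-eventhub` Python package should be able to send to it. The payload field names, the helper names `build_payload` and `send_readings`, and the connection string placeholder are assumptions for illustration; the real connection details come from the custom endpoint's settings in your own Eventstream.

```python
import json
from datetime import datetime, timezone


def build_payload(sensor_id: str, temperature_c: float, when: datetime) -> str:
    """Serialize one reading as JSON (field names are illustrative)."""
    return json.dumps({
        "sensorId": sensor_id,
        "temperatureC": temperature_c,
        "timestamp": when.isoformat(),
    })


def send_readings(connection_string: str, payloads: list[str]) -> None:
    """Send JSON payloads to an Event Hub compatible endpoint.

    Requires the third-party package `azure-eventhub`. It is imported
    lazily so that build_payload works without it being installed.
    Assumes the connection string names the target entity (EntityPath)
    and that all payloads fit into a single batch.
    """
    from azure.eventhub import EventData, EventHubProducerClient

    producer = EventHubProducerClient.from_connection_string(connection_string)
    with producer:
        batch = producer.create_batch()
        for payload in payloads:
            batch.add(EventData(payload))  # raises ValueError if the batch is full
        producer.send_batch(batch)


payload = build_payload("cold-room-1", 4.2, datetime(2025, 1, 1, tzinfo=timezone.utc))
# send_readings("<connection string from the custom endpoint>", [payload])
```

The send call is left commented out because it needs a live endpoint. A producer for real workloads would also have to handle full batches and retries, which this sketch deliberately skips.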

Tip: Microsoft offers various “sample” data sources, which are great for testing and experimentation.

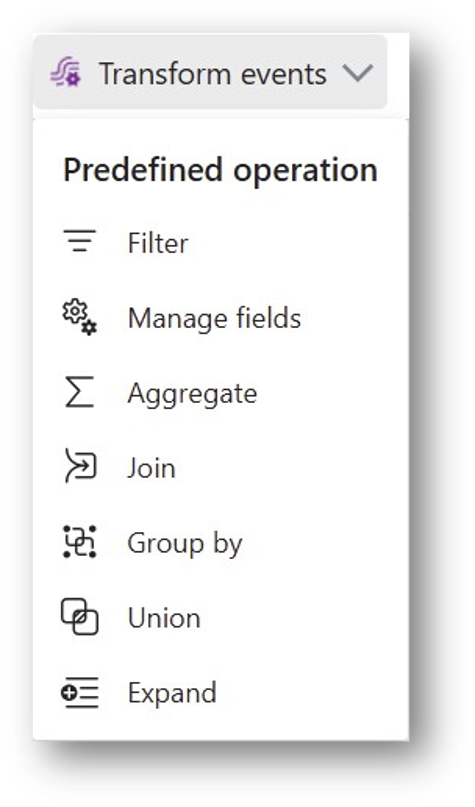

② Transformations

The incoming event data can now be cleansed and transformed in various ways. To do this, you append and configure one of several transformation operators after the source. These operators allow you to filter, combine, and aggregate data, select fields, and so on.

Example: Suppose the data source transmits the current room temperature multiple times per second, but for our planned analysis a one-minute granularity would be perfectly sufficient. So we use the “Group by” transformation to calculate the average, minimum, and maximum temperature for each one-minute window. This significantly reduces the data volume (and associated costs) before storage, while still preserving the information relevant to the analysis.
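The idea behind such a windowed aggregation can be sketched in plain Python. This is only an illustration of fixed, non-overlapping (tumbling) windows, not what Fabric runs internally; `aggregate_tumbling`, `WindowStats`, and the sample readings are made-up names and data, and the window length is a parameter.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone
from typing import Iterable, NamedTuple


class WindowStats(NamedTuple):
    window_start: datetime
    average: float
    minimum: float
    maximum: float


def aggregate_tumbling(
    readings: Iterable[tuple[datetime, float]], window_seconds: int
) -> list[WindowStats]:
    """Group (timestamp, temperature) pairs into fixed, non-overlapping windows."""
    buckets: dict[int, list[float]] = defaultdict(list)
    for timestamp, temperature in readings:
        # Integer division assigns each reading to exactly one window.
        buckets[int(timestamp.timestamp()) // window_seconds].append(temperature)
    return [
        WindowStats(
            window_start=datetime.fromtimestamp(index * window_seconds, tz=timezone.utc),
            average=sum(values) / len(values),
            minimum=min(values),
            maximum=max(values),
        )
        for index, values in sorted(buckets.items())
    ]


start = datetime(2025, 1, 1, tzinfo=timezone.utc)
# Four readings per second for two minutes: 480 input rows.
readings = [(start + timedelta(seconds=i / 4), 4.0 + (i % 8) * 0.1) for i in range(480)]
stats = aggregate_tumbling(readings, window_seconds=60)
```

With a 60-second window, the 480 input rows collapse into two output rows, each carrying the average, minimum, and maximum of its window.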

③ Destinations

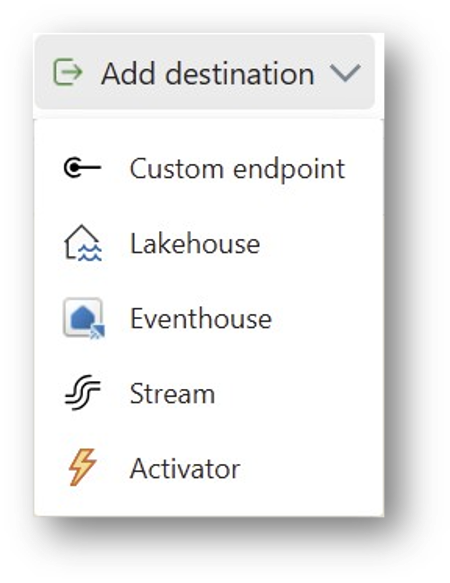

After all transformation steps are completed, the event data is sent to a destination. Most often, this is a table in an Eventhouse. The following destinations are supported:

- Eventhouse: An Eventhouse is a data store in Fabric that is optimized for event data. It supports continuous ingestion of new data and very fast analytics on that data. We will discuss Eventhouses in more detail in another blog post.

- Lakehouse: A lakehouse is Fabric’s “typical” data store for traditional (batch) scenarios. It supports both structured and unstructured data.

- Activator: Activator lets you trigger actions when certain conditions are met. For example, you could send an automatic email when the measured temperature exceeds a threshold. For more complex cases, a Power Automate flow can be triggered.

- Stream: Another Eventstream (a “derived stream”). This lets you chain Eventstreams, which helps break down complex logic and enables reuse.

- Custom Endpoint: As with sources, you can also use a custom endpoint as a destination and thus connect any third-party system. Kafka, AMQP, and Event Hub are supported here as well.

Eventstreams also support multiple destinations. This is useful, for instance, when implementing a Lambda architecture: you store fine-grained data (e.g. on a per-second basis) in an Eventhouse for a limited time to support real-time scenarios. In parallel, you aggregate the data (e.g. per minute) and store the result in a Lakehouse for historical data analysis.
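The two-path idea can be illustrated with a small Python sketch. Everything here is hypothetical: `fan_out` and the in-memory lists `hot` and `cold` merely stand in for the Eventhouse and Lakehouse destinations, which in Fabric you would configure in the user interface rather than in code.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean


def fan_out(readings, retention: timedelta):
    """Route one input stream to two illustrative in-memory destinations."""
    readings = list(readings)
    newest = max(ts for ts, _ in readings)

    # Hot path: keep raw per-second readings, but only within the retention period.
    hot = [(ts, value) for ts, value in readings if newest - ts < retention]

    # Cold path: one averaged row per minute, kept for the full history.
    per_minute: dict[datetime, list[float]] = {}
    for ts, value in readings:
        per_minute.setdefault(ts.replace(second=0, microsecond=0), []).append(value)
    cold = [(minute, mean(values)) for minute, values in sorted(per_minute.items())]

    return hot, cold


start = datetime(2025, 1, 1, tzinfo=timezone.utc)
readings = [(start + timedelta(seconds=i), 4.0) for i in range(600)]  # ten minutes
hot, cold = fan_out(readings, retention=timedelta(minutes=2))
```

For ten minutes of per-second input and a two-minute retention, the hot path holds the latest 120 raw readings while the cold path holds ten per-minute rows. That split is the trade-off the Lambda pattern is after: detail where it is fresh, compact aggregates for the long term.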

Costs

Using Eventstreams requires a paid Fabric Capacity. Microsoft recommends at least an F4 SKU (monthly prices can be found here). In practice, the adequate capacity level depends on several factors, particularly the compute power needed, the data volume, and the total run time of your Eventstreams. Further details can be found here.

If you don’t need an Eventstream for some time, you can deactivate it to avoid unnecessary load on your Fabric Capacity. This can be done separately for each source and destination.

Author

Rupert Schneider

Fabric Data Engineer at scieneers GmbH

rupert.schneider@scieneers.de